GPT-4 Ushers In an AI Era of Content Moderation

Content moderation has long been one of the hardest problems on the internet. Deciding which content is appropriate for a particular platform is inherently subjective, and even experts struggle to do it well.

OpenAI, the company behind ChatGPT, believes it can help.

Content moderation gets automated

Because acceptable content is defined platform by platform, moderation has resisted automation for years and remains difficult even for professionals.

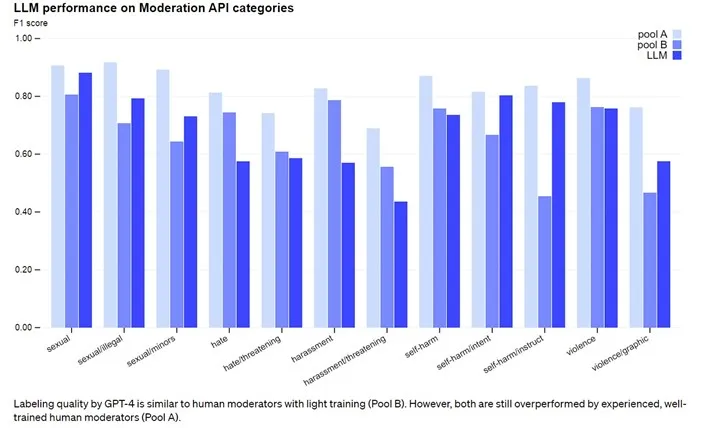

OpenAI is exploring a potential solution: it is evaluating how well its GPT-4 model can handle content moderation.

The company’s goal is to use GPT-4 to build a content moderation system that is scalable, consistent, and adaptable. The model is meant to help not only with individual moderation decisions but also with developing the policies behind them. As a result, OpenAI says, targeted policy changes and entirely new policies could be rolled out in hours rather than months.

The model is reportedly able to understand various rules and subtleties in content policies and can immediately adjust to any changes. OpenAI argues that this capability leads to more consistent tagging of content. In the future, it might become feasible for social media platforms such as X, Facebook, or Instagram to completely automate their content moderation and management practices.

Anyone with access to OpenAI’s API can already use this approach to build their own AI-driven moderation system. OpenAI also suggests that its GPT-4-based moderation tools could let companies complete roughly six months of work in a single day.
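To make the idea concrete, here is a minimal Python sketch of what such a policy-driven moderation step might look like. It is not OpenAI's implementation: the policy text, the three labels, and the function names (`build_messages`, `parse_label`, `moderate`, `fake_model`) are all hypothetical and chosen for illustration. The model call is passed in as a function so the example runs offline with a stand-in.

```python
from typing import Callable, Dict, List

# Hypothetical policy and label set; a real platform would supply its own.
LABELS = ("ALLOW", "REVIEW", "REMOVE")
POLICY = (
    "You are a content moderator. Apply this policy:\n"
    "- REMOVE: content that threatens violence against a person.\n"
    "- REVIEW: content you cannot classify with confidence.\n"
    "- ALLOW: everything else.\n"
    "Answer with exactly one label: ALLOW, REVIEW, or REMOVE."
)

Message = Dict[str, str]


def build_messages(policy: str, content: str) -> List[Message]:
    """Put the policy in the system message and the content in the user message."""
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": f"Content to classify:\n{content}"},
    ]


def parse_label(reply: str) -> str:
    """Read the first word of the reply as a label.

    Anything unrecognised falls back to REVIEW, so unclear cases
    are routed to a human moderator instead of being decided silently.
    """
    words = reply.strip().upper().split()
    first = words[0].strip(".,:;") if words else ""
    return first if first in LABELS else "REVIEW"


def moderate(
    content: str,
    ask_model: Callable[[List[Message]], str],
    policy: str = POLICY,
) -> str:
    """Classify one piece of content under the given policy."""
    return parse_label(ask_model(build_messages(policy, content)))


def fake_model(messages: List[Message]) -> str:
    """Offline stand-in for a real model call (assumption, for testing only)."""
    text = messages[-1]["content"].lower()
    return "REMOVE" if "threat" in text else "ALLOW"


if __name__ == "__main__":
    print(moderate("Nice photo!", fake_model))
    print(moderate("This is a threat.", fake_model))
```

With the OpenAI Python SDK, `ask_model` could be a small wrapper that calls `client.chat.completions.create(model="gpt-4", messages=messages)` and returns `response.choices[0].message.content`. Because the policy is just text in the prompt, changing a rule means editing `POLICY` rather than retraining anything, which is the property behind the claim that policy updates could take hours instead of months.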

Important for human health

Manually reviewing traumatic content, especially on social media, is known to take a significant toll on the mental health of human moderators.

In 2020, for instance, Meta agreed to pay at least $1,000 each to more than 11,000 moderators to compensate them for mental health issues they may have developed while reviewing content posted on Facebook. Using artificial intelligence to take some of the load off human moderators could be highly beneficial.

However, AI models are far from flawless. It’s well known that these tools are susceptible to errors in judgment, and OpenAI acknowledges that human involvement is still necessary.